Today is a day the Chinese people must always remember. On this day 73 years ago, August 15, 1945, Japanese radio broadcast the imperial edict read by Emperor Hirohito himself, announcing unconditional surrender.

73 years ago today

The front page of almost every newspaper in China

carried the same words in bold characters:

Japan surrendered

After July 7, 1937,

when the Lugouqiao Incident broke out,

Japanese imperialism trampled the land of China.

Shijiazhuang was also ravaged by the Japanese aggressors …

▼

On the night of July 7, 1937, Japanese troops on exercises near Lugou Bridge, southwest of Beiping, demanded to enter Wanping County to search for a soldier they claimed was "missing". The 29th Army, the Chinese garrison, sternly refused. The Japanese army then fired on the Chinese defenders and shelled the old city of Wanping, and the 29th Army rose in resistance. This is the "July 7th Incident" that shocked China and the world, also known as the Lugouqiao Incident. The "July 7th Incident" was the beginning of Japanese imperialism’s full-scale war of aggression against China, and it was also the starting point of the Chinese nation’s full-scale war of resistance.

On July 29th, 1937, Tianjin fell!

On July 31, 1937, Beiping fell!

Baoding fell on September 24, 1937!

In early October 1937, the Japanese army began to attack Shijiazhuang …

After the Japanese invaders occupied Baoding, they advanced south along the Pinghan Railway. On October 9, 1937, Zhengding was occupied by the Japanese army, and more than 2,000 Chinese soldiers died for their country.

▲ Japanese troops attacking the Zhengding city wall

On October 9, 1937, the Japanese army began to attack Shijiazhuang. At 14:30 on the 10th, Japanese troops occupied Shijiazhuang.

▲ October 11, 1937: the Japanese Iwakura unit that occupied Shijiazhuang, Hebei Province.

▲ Chinese army trucks overturned by Japanese bombing.

After Shijiazhuang fell, the Japanese aggressors began their brutal rule.

On October 10, 1937, Shijiazhuang fell. In the early summer of 1938, the Japanese army seized farmland near what is now Ping’an Park in Shijiazhuang and built a barracks called the South Barracks.

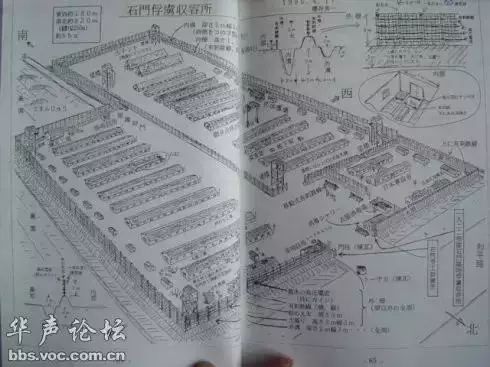

▲ Schematic diagram of Shijiazhuang concentration camp

This was the prisoner-of-war labor concentration camp with the longest history, the largest number of detainees, the worst persecution and the fiercest struggle in China.

Over time, about 50,000 anti-Japanese soldiers, civilians and innocent people were arrested and detained in the South Barracks. Of these, about 30,000 were sent to North China, Northeast China and Japan as laborers, and about 20,000 were tortured to death in the camp.

On August 15, 1997, a monument to the compatriots who suffered in the Shijiazhuang concentration camp was built on the site of the former camp, now Ping’an Park.

▲ Monument in Ping’an Park, Shijiazhuang

However, the heroic people of Shijiazhuang did not give in!

They created one miracle after another in the history of the Anti-Japanese War.

On this day that must be remembered,

Let’s follow these traces of history,

Look at how the people of Shijiazhuang resisted the Japanese invaders!

NO.1

Gaocheng

Slaughter could not stop the tenacious war of resistance

On the morning of October 12, 1937, more than 5,000 Japanese troops surrounded Meihua Town, and the invaders began a massacre that lasted four days and three nights. Smoke billowed over Meihua Town and flames blazed, while gunfire, the battering of doors and the cries of adults and children merged into one.

It was not until noon on October 15 that the Japanese army left Meihua Town. According to detailed statistics compiled afterwards, Meihua Town had 550 families and 2,500 people. Of these, 46 families were wiped out entirely and 1,547 people were killed.

The tragedy in Meihua Town roused the people of Gaocheng to resistance. A month later, the anti-Japanese armed forces organized by Communist Party member Ma Yutang took the county seat and captured more than 40 Japanese troops.

In memory of the victims, Meihua Town built the "Martyrs Pavilion" in 1946, which was rebuilt in 1968 and again in 1986, and preserved four major sites of the massacre: the Windlass Puddle, the Alkaline Water Pit, the Blood Well and the Thirty-six Graves.

NO.2

Jingxing

The blood of six heroes stained Guayun Mountain

Xiaoliyan Village in Jingxing was the first forward command post set up by Commander Nie Rongzhen in the Hundred Regiments Offensive. On August 20, 1940, Commander Nie Rongzhen fired the first shot of the attack on the Zhengtai Railway here.

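▲ Xiaoliyan Village, Xinzhuang Township, Jingxing County: site of the Hundred Regiments Offensive memorial stele forest

In the autumn of 1940, Japanese invading forces encircled Guayun Mountain in northeastern Jingxing. In the fierce fighting, squadron leader Li Hongshan was shot and killed, and Lü Xiulan, a young woman of only 22, bravely stepped forward to command the battle. The six heroes fought to the death against the Japanese and puppet troops who had stormed the mountain. In the end, out of ammunition and food and driven to the edge of a cliff, they chose death over surrender, smashed their guns and leapt from the cliff, dying heroically.

They were Communist Party member Lü Xiulan, 15-year-old Children’s Corps member Kang Santang, cook Liu Guizi, and Kang Yingying, Li Shuxiang and Kang Erdan. They were later honored as the "Six Heroes of Guayun Mountain".

NO.3

Shenze

The Battle of Songzhuang begins

Songzhuang lies fifteen li northeast of the Shenze county seat and had tunnels dug beneath it, which made it well suited to village fighting. Two companies of the Eighth Route Army’s 22nd Regiment and part of the Gao-Wu Detachment were stationed in North Songzhuang, while one company of the Garrison Brigade and part of the Jin-Shen-Ji Detachment were stationed in South Songzhuang. Shortly after 3 a.m. on June 7, 1940, these units found themselves in a brutal battle.

Zuo Ye, commander of the 22nd Regiment and a skilled ambush fighter, was the first to spot the enemy cavalry. When the enemy was thirty meters from the position, one heavy machine gun, three light machine guns and one grenade launcher opened fire together, wounding Sakamoto Kichitaro, the Japanese detachment commander who was leading more than thirty cavalrymen into the village.

They held off a siege by more than two thousand Japanese and puppet troops and broke out after killing or wounding more than three hundred of them. The Battle of Songzhuang was a great battle, highly praised around the world at the time and by later generations.

NO.4

Jinzhou

Yang Lingmei, a heroine in the mold of Liu Hulan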

In the Martyrs Cemetery on Dongsheng Road, Jinzhou City, stands a 45-meter-high white marble statue of the martyr Yang Lingmei. In 1986, General Lü Zhengcao wrote an inscription for her: "Martyr Yang Lingmei, a Liu Hulan-style heroine of the Jizhong Plain".

Yang Lingmei was born in 1921. She was vigorous, honest and active. She mobilized women to spin and weave, made military clothes and shoes for the Eighth Route Army, and led women to dig trenches and tear up roads, stand guard, check passers-by and watch for enemy agents.

At 3 a.m. on April 30, 1940, Japanese and puppet troops suddenly surrounded Nantian Village. Yang Lingmei and her colleagues were arrested there while protecting documents. After the arrest, Japanese soldiers locked Yang Lingmei in a large wooden cage and tortured her. The enemy paraded her naked through the streets. Yang Lingmei shouted to the crowd: "Folks, follow the Communist Party and defeat the Japanese bandits …"

The Japanese army took Yang Lingmei to the foot of the Dongguan wall, where she stared at them, eyes wide with fury, and shouted, "Down with Japanese imperialism! Down with the traitors! Long live the Communist Party! …" Finally, the enemy nailed Yang Lingmei to the wall with specially made large nails.

On May 6th, Yang Lingmei died heroically at the age of 19.

NO.5

Zhengding

Zhengding people’s "tunnel warfare"

Gaojiazhuang in the movie Tunnel Warfare

is Gaoping Village in Zhengding!

▼

During the Anti-Japanese War, Gaoping Village in Zhengding was a village of fighters and heroes. The Japanese and puppet troops besieged Gaoping in force five times. Using the cover of the tunnels, Gaoping’s militia of just over 50 men set a brilliant record: they fought the Japanese and puppet troops more than 50 times, killed 400 of them, and repelled five attacks by enemy forces numbering in the thousands.

Lay the mines and raise the guns.

You take the tunnel, I’ll take the roof.

Hold the commanding heights, block the street walls,

Form a net of fire.

Though we are only plowmen,

We’ll beat back the devils who enter our village again.

More than 70 years ago, villagers in Gaoping, Zhengding, wrote and sang this authentic battle song and waged a resolute struggle against the enemy! The finest battle fought by the people of Gaoping took place on May 4, 1945. More than 1,600 Japanese and puppet troops surrounded the village in an attempt to destroy the tunnels and subdue Gaoping.

▲ Tunnel in Gaoping Village, Zhengding County

The village militia quickly took up their combat posts, and men, women and children immediately went down into the tunnels. Facing the enemy’s fierce attack, the militia struck back with grenades from commanding positions on the rooftops. In this battle, the Gaoping militia killed 59 Japanese and puppet troops, including 4 commanders, and blew up 7 carts.

▲ Zhou Baoquan, now over 90 years old, was once a squad leader in Gaoping Village.

During the Anti-Japanese War, the soldiers and civilians of Gaoping Village, Zhengding County, killed or wounded more than 2,240 enemy troops, captured more than 1,590, and seized more than 2,870 guns and more than 20,000 bullets.

In 1963, the production team of the film "Tunnel Warfare" conducted interviews in the village and took Gaoping Village as one of the film’s prototype villages.

NO.6

Lingshou

The battle of annihilation at Chenzhuang, Lingshou

The Japanese aggressors committed heinous crimes against the people of Lingshou: they massacred 5,252 people and left 2,095 unaccounted for; plague caused by bacterial weapons and hunger caused by the war killed 10,414 people and disabled 3,736; more than 15,000 laborers …

▲ Japanese weapons seized in the battle of annihilation at Chenzhuang

▲ The fighting at Chenzhuang lasted six days and five nights.

In September 1939, 1,500 Japanese troops attempted to occupy Chenzhuang. Under the command of He Long and Nie Rongzhen, in 6 days and 5 nights of fighting, more than 1,280 enemy troops, from the brigade commander down, were killed and 16 were captured, and 3 mountain guns, 23 light and heavy machine guns, more than 500 rifles and more than 50 war horses were seized.

▲ Villagers in Xiaohanlou Village, Lingshou County, made their own crude guns.

NO.7

Pingshan

Every gully and ridge was a battlefield.

The Japanese army perpetrated more than 50 major massacres in Pingshan County, killing 14,000 innocent people across more than 300 villages in the county.

Yet in Pingshan, a Taihang Mountain county with a population of only 250,000, 70,000 people joined the army, 12,000 joined the Eighth Route Army, and 5,000 martyrs died for their country …

Pingshan is a strong base!

Pingshan people are heroic people!

The Pingshan Regiment, the renowned 718th Regiment of the 359th Brigade

▼

On September 28, after the July 7th Incident, the Eighth Route Army came to Hongzidian in Pingshan. More than 1,700 sons of the Taihang Mountains resolutely left their hometowns. This anti-Japanese armed force, known as the "Sons-and-Brothers Army of the Taihang Mountains", was incorporated into the 359th Brigade led by Wang Zhen, and the Pingshan Regiment distinguished itself in battle again and again …

▲ Peasant children heading for the anti-Japanese battlefield

▲ Fan Mingtang, a veteran of the Pingshan Regiment

"Join the army and fight the devils!"

The autumn wind carried this slogan across every gully and ridge in Pingshan County.

▲ The headquarters of the Japanese army in Pingshan

▲ Tearing down the devils’ blockhouse.

A tribute to heroes: the Huishe Big Gun Squad

Militiamen, too, could strike fear into the enemy.

▼

At the start, the Big Gun Squad of Huishe, Pingshan, had only six members, who began their revolution with "one and a half guns". Later, villagers from many villages joined. They killed Japanese aggressors, resisted mopping-up campaigns, took out blockhouses and transported public grain. They fought bravely against the invaders and repeatedly distinguished themselves in battle, for which they were received by Nie Rongzhen, commander of the military region.

▲ On May 4, 1941, Commander Nie Rongzhen met with representatives of the Big Gun Squad in Donghuishe.

From its founding to the victory of the War of Resistance against Japanese Aggression in 1945, the Big Gun Squad fought the enemy 257 times, destroyed 8 enemy bunkers, captured 3 enemy strongholds, wiped out 274 Japanese and puppet troops, and seized more than 180 guns, 1 small artillery piece and more than 150,000 bullets.

The hero "Wang Erxiao", who protected the Xinhua radio station and the newspaper with his life

▼

"The cattle are still grazing on the hillside, but the cowherd is nowhere to be found …" This song, written in the autumn of 1942, was sung all over China. One of the prototypes of the little hero "Wang Erxiao" in the song is Yan Fuhua, a native of Nangonglonggou Village, Zhabei Township, Pingshan County.

Shi Linshan, an 80-year-old man, will never forget what he witnessed: 13-year-old Yan Fuhua (known as "Second Brother") was flung off the cliff on a Japanese soldier’s bayonet, and the river was dyed red with his blood …

At that time, Xinhua Radio Station (predecessor of China National Radio), the Shanxi-Chahar-Hebei Daily (predecessor of People’s Daily) and some revolutionary armed forces were stationed in the nearby Gunlonggou village. "To protect everyone’s safe transfer, Second Brother kept leading the devils in circles around the mountain to buy time."

Finally, Yan Fuhua led a group of devils to the top of Erdaoquan, where there was nowhere left to go. The 13-year-old suddenly grabbed the thigh of a devil next to him, trying to take the enemy over the cliff with him! A cold bayonet stabbed him from behind, and in an instant Yan Fuhua was flung off a cliff more than 20 meters high!

▲ Recalling the scene of Second Brother’s sacrifice, Shi Linshan, who is in his 80s, burst into tears.

It was not until the evening that Shi Linshan and the villagers found Yan Fuhua’s body at the bottom of the cliff and buried it. "Old and young alike, everyone cried." Shi Linshan said with tears in his eyes that the river at the bottom of the cliff really was dyed red …

Stories like these could not all be told in three days and nights.

There are also Wuji, Zhaoxian, Gaoyi, Yuanshi,

Zanhuang, Xingtang, Xinle …

Battles like these took place on the land of Shijiazhuang every day.

We must not forget the invaders,

nor forget the heroic sons and daughters of Shijiazhuang!

Remember history and pay tribute to heroes!

Compiled by Shijiazhuang Fabu from the WeChat official account Beiyang House, Hebei Daily, Shijiazhuang Daily, and other sources.

Operation | Shijiazhuang Radio and TV Station New Media Center

Editor | Chen Zhi
